How to Set Up Eclipse to Run Spark Programs Using Gradle as the Build Tool

This post is a detailed demo of setting up Eclipse with Gradle to run Spark programs.

This post walks through how to set up your local system to test Spark programs. We will use Gradle as the build tool. Additionally, we will see how to run the same code using the spark-submit command.

Prerequisites

- Eclipse (download from here)

- Scala (Read this to Install Scala)

- Gradle (Read this to Install Gradle)

- Apache Spark (Read this to Install Spark)
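Before opening Eclipse, it can help to confirm that the command-line prerequisites are reachable from your shell. The following is a minimal sketch, not part of the original project: `check_tools` is a hypothetical helper, and the command names `scala`, `gradle` and `spark-submit` assume a typical install that puts them on the `PATH`. Eclipse itself is a desktop application and is not checked here.

```shell
# Hypothetical helper: report which of the given commands are on the PATH.
# Returns 0 when every tool is found and 1 when at least one is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Assumed command names for the Scala, Gradle and Spark installs.
check_tools scala gradle spark-submit || echo "Install the missing tools before continuing."
```

If a tool is reported as missing even though it is installed, the usual cause is that its `bin` directory has not been added to the `PATH`.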

Set Up Eclipse and Run Sample Code

Clone the repo from this GitHub Repo

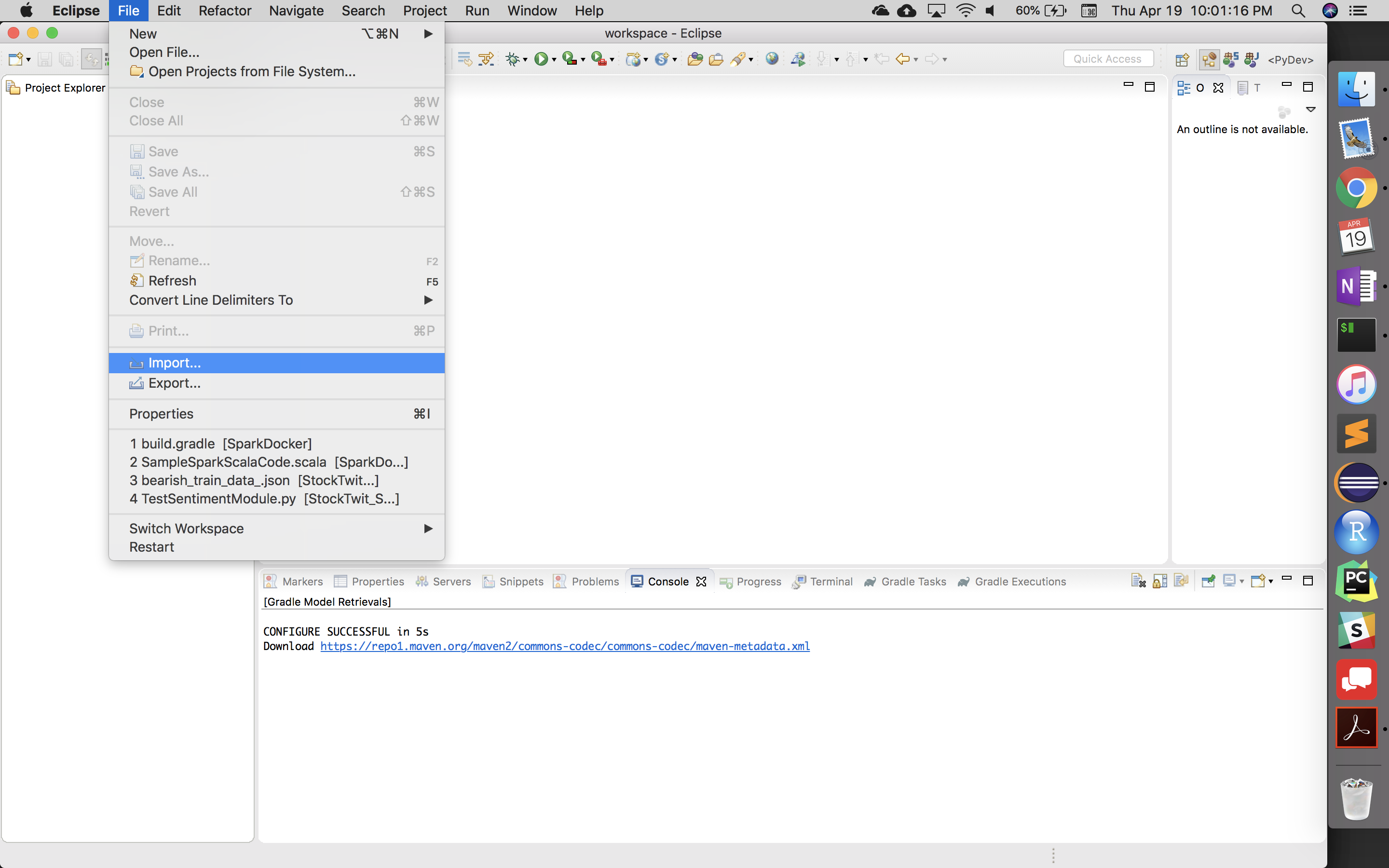

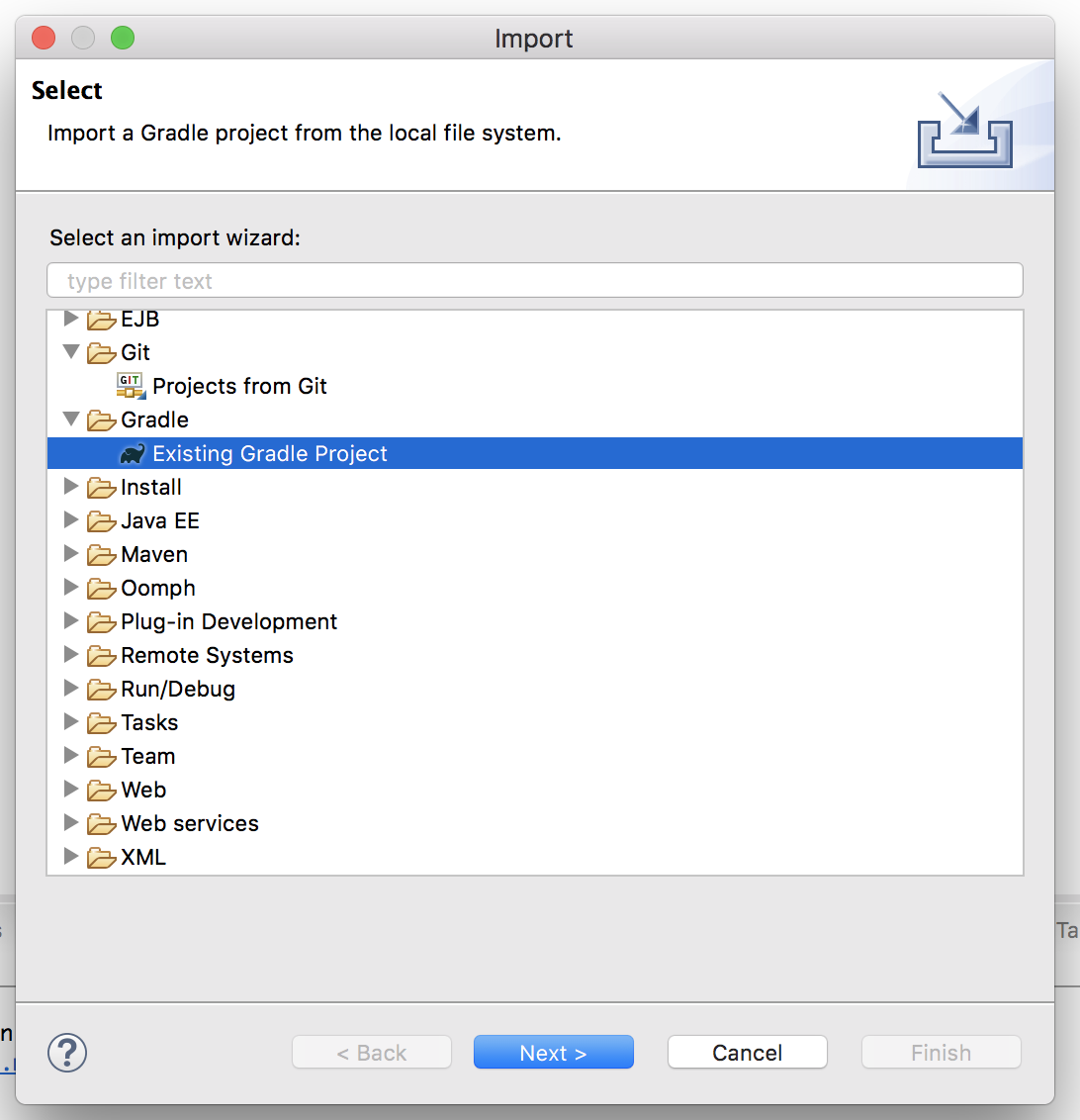

Open Eclipse. Go to File –> Import –> Gradle –> Existing Gradle Project.

Select Gradle in the next window.

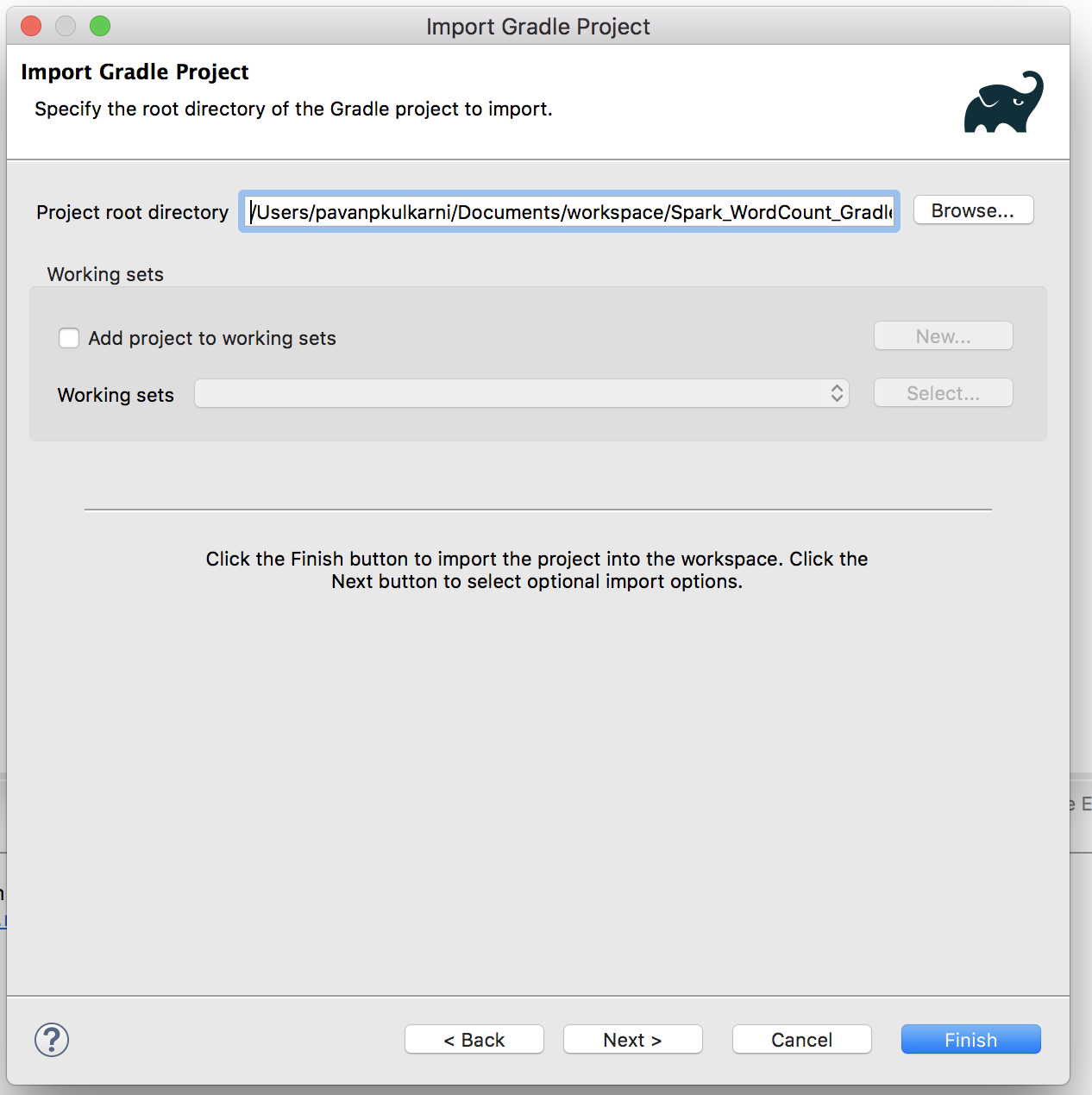

Set the project root directory to the directory where you cloned the GitHub repo. Click Next.

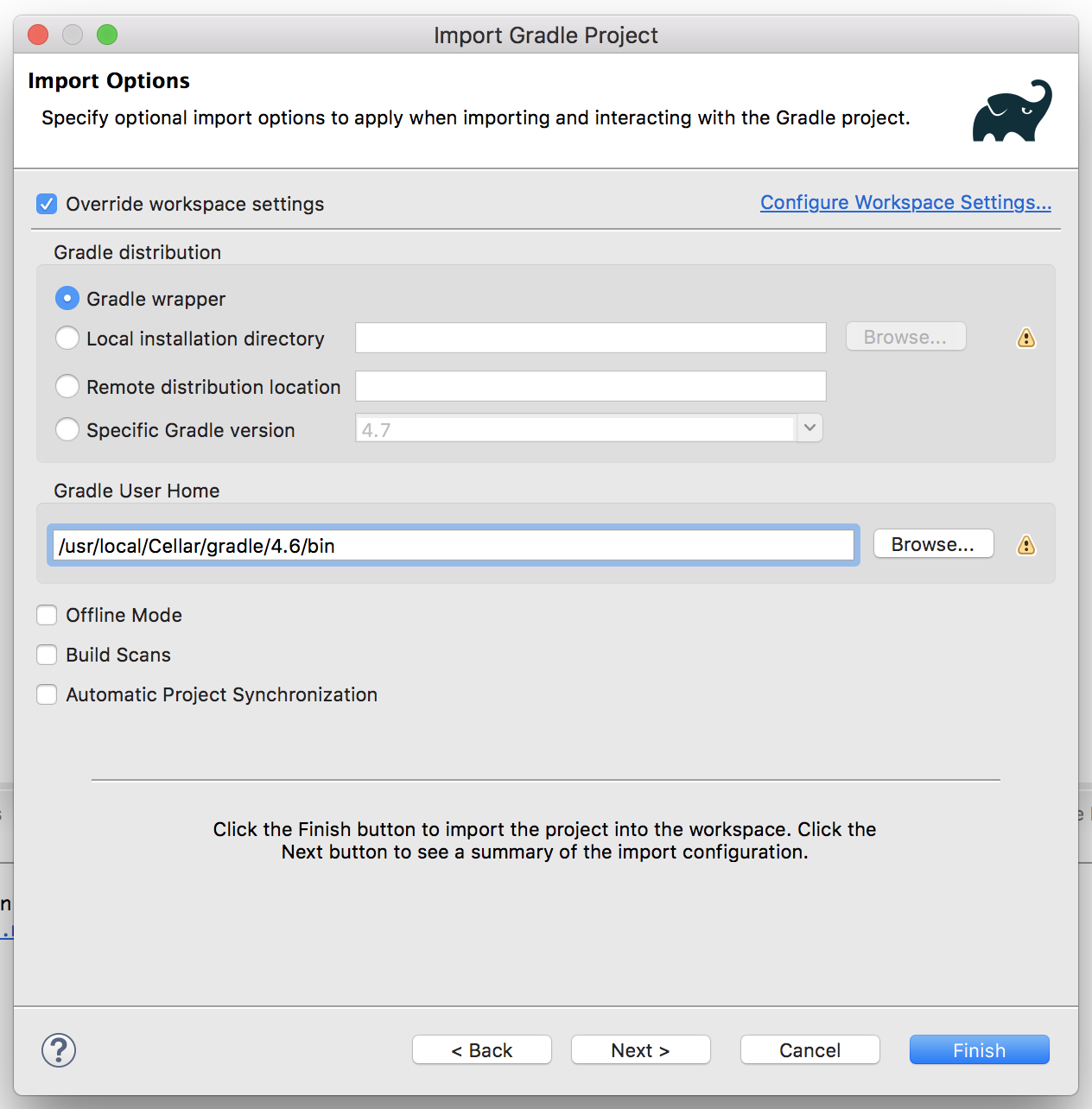

Configure Eclipse to use the installed Gradle. Here we point it to the Gradle that was installed using Homebrew. Refer to this post to see how to install Gradle using Homebrew.

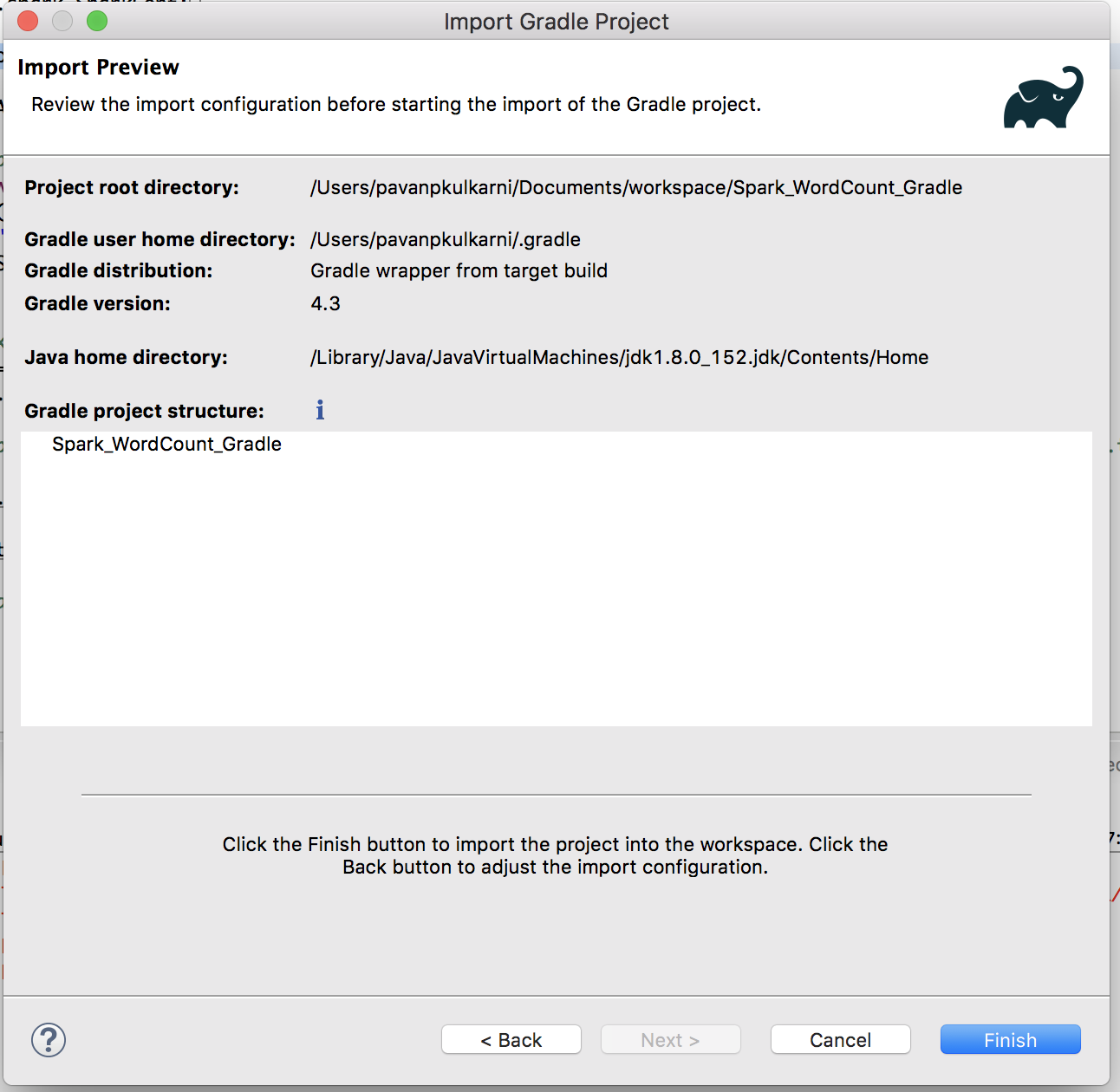

Upon successful Gradle import, you should see this window.

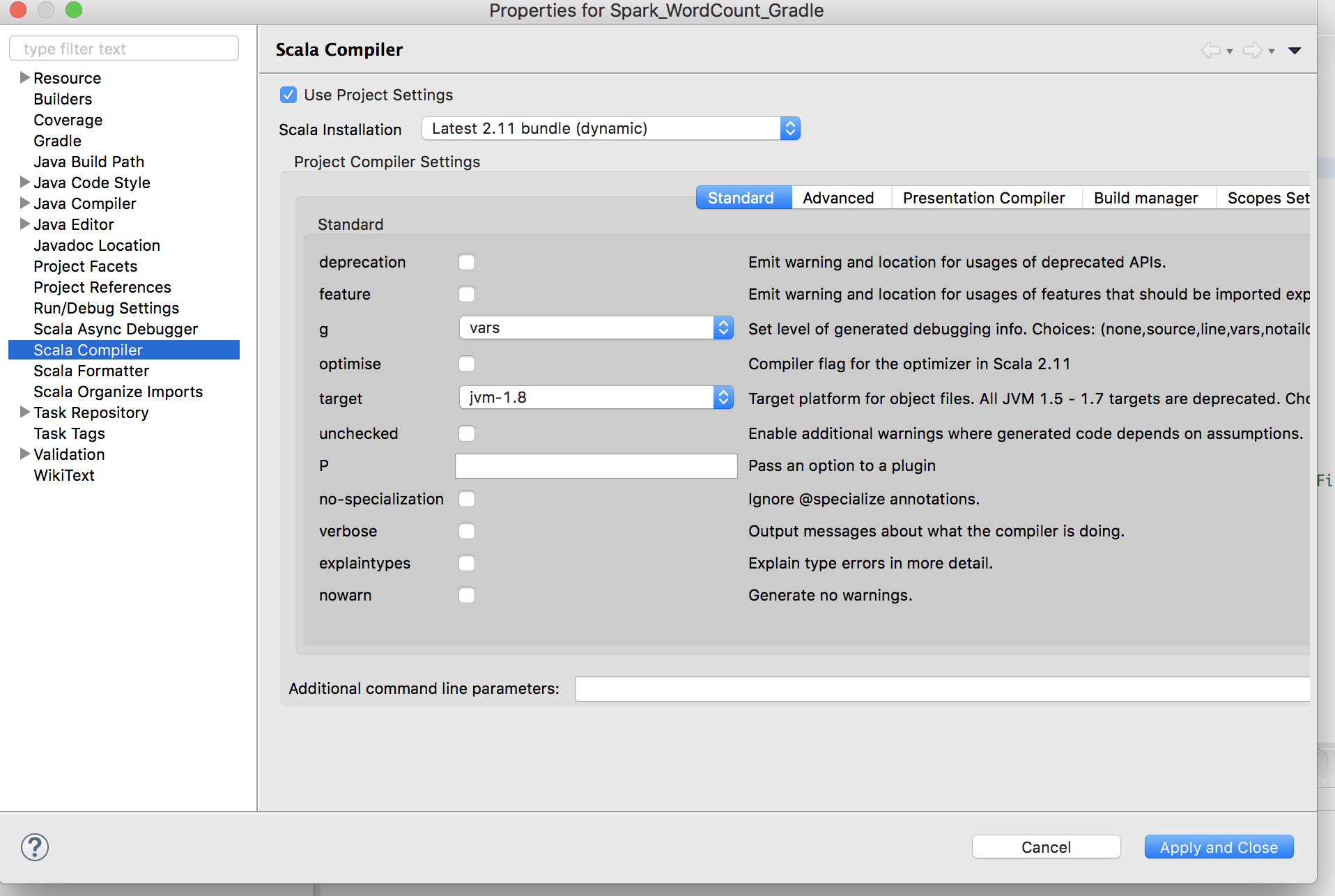

My Eclipse defaulted to Scala 2.12. The Scala 2.12 builds of the Apache Spark libraries are not available on the official Maven repository at the time of writing, so we have to switch the Eclipse project down to Scala 2.11.

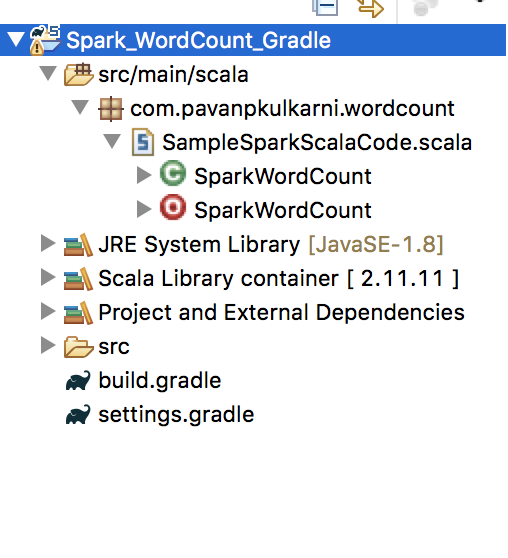
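The reason the Scala version matters is that Spark artifacts carry the Scala binary version as a suffix in their name, for example `spark-core_2.11`, and the compiler used by the project has to match it. The sketch below illustrates that rule with two hypothetical helper functions. The full version numbers `2.11.12` and `2.12.4` are illustrative inputs only, not values taken from this project.

```shell
# Reduce a full Scala version such as 2.11.12 to its binary version (2.11).
scala_binary_version() {
  printf '%s\n' "$1" | cut -d. -f1,2
}

# Succeed only when the artifact name ends in the suffix for that Scala version.
artifact_matches_scala() {
  artifact="$1"
  full_version="$2"
  suffix="_$(scala_binary_version "$full_version")"
  case "$artifact" in
    *"$suffix") return 0 ;;
    *) return 1 ;;
  esac
}

# Illustrative checks: a _2.11 artifact fits Scala 2.11.x but not 2.12.x.
if artifact_matches_scala "spark-core_2.11" "2.11.12"; then
  echo "spark-core_2.11 matches Scala 2.11.12"
fi
if ! artifact_matches_scala "spark-core_2.11" "2.12.4"; then
  echo "spark-core_2.11 does not match Scala 2.12.4"
fi
```

The same comparison applies to the Scala library version that Eclipse attaches to the project: its binary version should agree with the suffix of the Spark dependencies declared in the build file.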

Once everything is in place, you should see the project structure as below. Eclipse will often complain that the Scala file is not executable. In such cases, you will have to explicitly add “Scala Nature” to your project via “Right Click on Project –> Configure –> Add Scala Nature”.

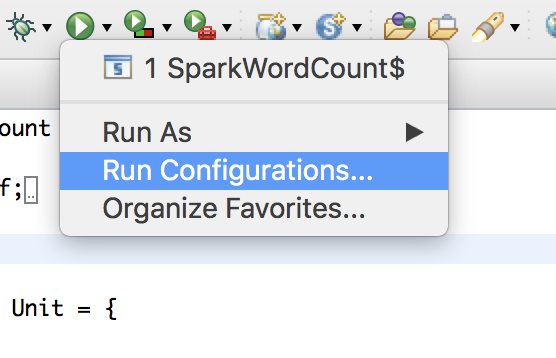

Add the input file name in Run Configurations.

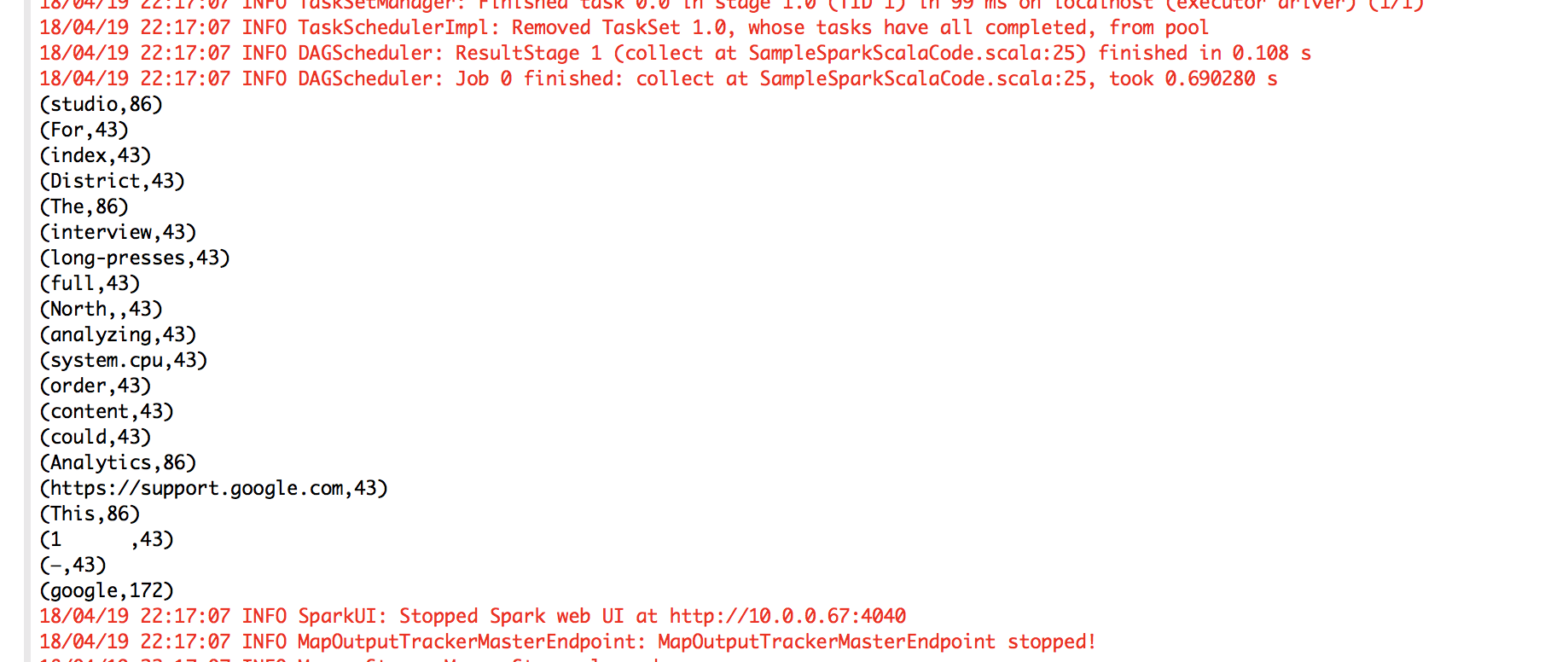

To run the project, Right Click –> Run As –> Scala Application. You should see a sample output as below

Run with spark-submit Command

Change directory to the root of the project.

    Pavans-MacBook-Pro:Spark_WordCount_Gradle pavanpkulkarni$ pwd
    /Users/pavanpkulkarni/Documents/workspace/Spark_WordCount_Gradle
    Pavans-MacBook-Pro:Spark_WordCount_Gradle pavanpkulkarni$ ls -ltr
    total 360
    -rw-r--r--  1 pavanpkulkarni staff 979 Apr 19 00:15 build.gradle
    drwxr-xr-x  3 pavanpkulkarni staff 96 Apr 19 22:08 src
    -rw-r--r--  1 pavanpkulkarni staff 657 Apr 19 22:09 settings.gradle
    drwxr-xr-x  3 pavanpkulkarni staff 96 Apr 19 22:14 bin
    -rw-r--r--@ 1 pavanpkulkarni staff 174511 Apr 19 22:16 data.txt

Run `gradle clean build` to build the jar for our wordcount project.

    Pavans-MacBook-Pro:Spark_WordCount_Gradle pavanpkulkarni$ gradle clean build
    Starting a Gradle Daemon (subsequent builds will be faster)

    > Task :compileScala
    Pruning sources from previous analysis, due to incompatible CompileSetup.

    BUILD SUCCESSFUL in 18s
    3 actionable tasks: 2 executed, 1 up-to-date

Since I had already run `gradle clean build`, I get the above message. If it is your first time, it will take a while to download all the dependencies before showing the BUILD SUCCESSFUL message.

Spark_WordCount_Gradle-1.0.jar will be generated under the `build` directory, in `build/libs`. This directory is created by running the `gradle clean build` command.

    Pavans-MacBook-Pro:Spark_WordCount_Gradle pavanpkulkarni$ ls -ltr build/*
    build/tmp:
    total 0
    drwxr-xr-x 2 pavanpkulkarni staff 64 Apr 19 23:23 compileScala
    drwxr-xr-x 3 pavanpkulkarni staff 96 Apr 19 23:24 scala
    drwxr-xr-x 3 pavanpkulkarni staff 96 Apr 19 23:24 jar

    build/libs:
    total 16
    -rw-r--r-- 1 pavanpkulkarni staff 6489 Apr 19 23:24 Spark_WordCount_Gradle-1.0.jar

    build/classes:
    total 0
    drwxr-xr-x 3 pavanpkulkarni staff 96 Apr 19 23:24 scala
    Pavans-MacBook-Pro:Spark_WordCount_Gradle pavanpkulkarni$

Run `spark-submit` as below.

    Pavans-MacBook-Pro:Spark_WordCount_Gradle pavanpkulkarni$ spark-submit --master local[4] --verbose --class com.pavanpkulkarni.wordcount.SparkWordCount build/libs/Spark_WordCount_Gradle-1.0.jar "data.txt"
    Using properties file: null
    Parsed arguments:
      master                  local[4]
      deployMode              null
      executorMemory          null
      executorCores           null
      totalExecutorCores      null
      propertiesFile          null
      driverMemory            null
      driverCores             null
      driverExtraClassPath    null
      driverExtraLibraryPath  null
      driverExtraJavaOptions  null
      supervise               false
      queue                   null
      numExecutors            null
      files                   null
      pyFiles                 null
      archives                null
      mainClass               com.pavanpkulkarni.wordcount.SparkWordCount
      primaryResource         file:/Users/pavanpkulkarni/Documents/workspace/Spark_WordCount_Gradle/build/libs/Spark_WordCount_Gradle-1.0.jar
      name                    com.pavanpkulkarni.wordcount.SparkWordCount
      childArgs               [data.txt]
      jars                    null
      packages                null
      packagesExclusions      null
      repositories            null
      verbose                 true
    .
    .
    .
    .
    .
    .
    (studio,86)
    (For,43)
    (index,43)
    (District,43)
    (The,86)
    (interview,43)
    (long-presses,43)
    (full,43)
    (North,,43)
    (analyzing,43)
    (system.cpu,43)
    (order,43)
    (content,43)
    (could,43)
    (Analytics,86)
    (https://support.google.com,43)
    (This,86)
    (1 ,43)
    (—,43)
    (google,172)
    2018-04-19 23:33:35 INFO AbstractConnector:318 - Stopped Spark@384fc774{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}
    2018-04-19 23:33:35 INFO SparkUI:54 - Stopped Spark web UI at http://10.0.0.67:4040
    2018-04-19 23:33:35 INFO MapOutputTrackerMasterEndpoint:54 - MapOutputTrackerMasterEndpoint stopped!
    2018-04-19 23:33:35 INFO MemoryStore:54 - MemoryStore cleared
    2018-04-19 23:33:35 INFO BlockManager:54 - BlockManager stopped
    2018-04-19 23:33:35 INFO BlockManagerMaster:54 - BlockManagerMaster stopped
    2018-04-19 23:33:35 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint:54 - OutputCommitCoordinator stopped!
    2018-04-19 23:33:35 INFO SparkContext:54 - Successfully stopped SparkContext
    2018-04-19 23:33:35 INFO ShutdownHookManager:54 - Shutdown hook called
    2018-04-19 23:33:35 INFO ShutdownHookManager:54 - Deleting directory /private/var/folders/nb/ygmwx13x6y1_9pyzg1_82w440000gn/T/spark-4e65152d-af0d-43e9-8a02-b592cb95a46e
    2018-04-19 23:33:35 INFO ShutdownHookManager:54 - Deleting directory /private/var/folders/nb/ygmwx13x6y1_9pyzg1_82w440000gn/T/spark-e1d7509e-79da-40ba-8c4a-64a45745e19f
    Pavans-MacBook-Pro:Spark_WordCount_Gradle pavanpkulkarni$

While the application is running, the Spark web UI is available at http://10.0.0.67:4040
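The build and submit steps in this section can be strung together in a small script. This is a sketch only: the main class, jar path, master and input file are copied from the commands in this post, while the `submit_command` helper and the `DRY_RUN` switch are hypothetical names introduced for illustration. By default the script only prints the commands, so it is safe to run on a machine without Gradle or Spark.

```shell
# Values copied from the spark-submit invocation in this post.
MAIN_CLASS="com.pavanpkulkarni.wordcount.SparkWordCount"
JAR="build/libs/Spark_WordCount_Gradle-1.0.jar"

# Hypothetical helper: print the spark-submit command for a master and an input file.
# The master is quoted because the shell can treat [ and ] as glob characters.
submit_command() {
  master="$1"
  input="$2"
  echo "spark-submit --master \"$master\" --class $MAIN_CLASS $JAR \"$input\""
}

# DRY_RUN is a hypothetical switch for this sketch; it defaults to 1 (print only).
if [ "${DRY_RUN:-1}" = "1" ]; then
  echo "gradle clean build"
  submit_command "local[4]" "data.txt"
else
  gradle clean build
  # Stop early if the build did not produce the expected jar.
  [ -f "$JAR" ] || { echo "jar not found: $JAR" >&2; exit 1; }
  spark-submit --master "local[4]" --class "$MAIN_CLASS" "$JAR" "data.txt"
fi
```

Run it from the project root with `DRY_RUN=0` set in the environment to build and submit for real.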

You can look up other ways to submit a Spark application here.
